ACE 2

Overview

ACE (Autonomous Classification Engine) is a meta-learning software package for selecting, optimizing and applying machine learning algorithms to music research. Given a set of feature vectors, ACE experiments with a variety of classifiers, classifier parameters, classifier ensemble architectures and dimensionality reduction techniques to arrive at a good configuration for the problem at hand. This matters because different algorithms can be better suited to different problems and types of data. ACE is designed to increase classification success rates, to make powerful machine learning technology accessible to users of all technical levels, and to provide a framework for experimenting with new algorithms.
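The core meta-learning idea can be illustrated with a small, self-contained sketch: several candidate classifiers are scored with k-fold cross-validation on the same feature vectors, and the best-scoring one is kept. This is not ACE's API or its actual search procedure. The two toy classifiers (`NearestCentroid`, `OneNearestNeighbour`) and the helper functions are hypothetical stand-ins for the Weka algorithms ACE compares, and the sketch searches only over the choice of classifier, not over parameters, ensembles or dimensionality reduction.

```python
import random
from statistics import mean


def _sq_dist(a, b):
    # Squared Euclidean distance between two feature vectors.
    return sum((p - q) ** 2 for p, q in zip(a, b))


class NearestCentroid:
    """Hypothetical toy classifier: assigns the class whose mean vector is closest."""

    def fit(self, X, y):
        sums, counts = {}, {}
        for x, label in zip(X, y):
            total = sums.setdefault(label, [0.0] * len(x))
            for i, v in enumerate(x):
                total[i] += v
            counts[label] = counts.get(label, 0) + 1
        self.centroids = {c: [s / counts[c] for s in total] for c, total in sums.items()}
        return self

    def predict(self, x):
        return min(self.centroids, key=lambda c: _sq_dist(x, self.centroids[c]))


class OneNearestNeighbour:
    """Hypothetical toy classifier: copies the label of the closest training vector."""

    def fit(self, X, y):
        self.X, self.y = list(X), list(y)
        return self

    def predict(self, x):
        best = min(range(len(self.X)), key=lambda i: _sq_dist(x, self.X[i]))
        return self.y[best]


def cross_val_accuracy(make_model, X, y, k=5, seed=0):
    """Mean accuracy over k shuffled (unstratified) folds."""
    idx = list(range(len(X)))
    random.Random(seed).shuffle(idx)
    folds = [f for f in (idx[i::k] for i in range(k)) if f]  # drop empty folds
    scores = []
    for fold in folds:
        held_out = set(fold)
        train = [i for i in idx if i not in held_out]
        model = make_model().fit([X[i] for i in train], [y[i] for i in train])
        correct = sum(model.predict(X[i]) == y[i] for i in fold)
        scores.append(correct / len(fold))
    return mean(scores)


def select_classifier(candidates, X, y, k=5):
    """Return (name, accuracy) for the candidate with the highest cross-validated accuracy."""
    results = {name: cross_val_accuracy(make, X, y, k) for name, make in candidates.items()}
    best = max(results, key=results.get)
    return best, results[best]
```

A call such as `select_classifier({"nearest_centroid": NearestCentroid, "1-nn": OneNearestNeighbour}, X, y, k=4)` returns the name and cross-validated accuracy of the better candidate; the classes themselves serve as model factories.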

ACE can be used to evaluate different configurations in terms of success rates, stability across trials and processing times. Each of these factors may vary in relevance, depending on the goals of the particular task under consideration.
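One way to weigh success rate, stability across trials and processing time against each other is sketched below. It is an illustrative example, not ACE's reporting code: `summarize_trials`, `rank_configurations` and the weighting scheme are hypothetical, and stability is assumed to be measured as the population standard deviation of the per-trial success rates.

```python
import time
from statistics import mean, pstdev


def summarize_trials(run_trial, n_trials=5):
    """Run one configuration several times and summarize the three factors.

    `run_trial` is a hypothetical callable that takes a trial index and returns
    a success rate in [0, 1] (for example, one cross-validation run).
    """
    rates, seconds = [], []
    for t in range(n_trials):
        start = time.perf_counter()
        rates.append(run_trial(t))
        seconds.append(time.perf_counter() - start)
    return {
        "success": mean(rates),    # average success rate
        "std_dev": pstdev(rates),  # lower means more stable across trials
        "seconds": mean(seconds),  # average processing time per trial
    }


def rank_configurations(summaries, w_success=1.0, w_stability=0.5, w_time=0.0):
    """Return configuration names best-first under an illustrative weighted score.

    The weights are hypothetical knobs expressing how much each factor matters
    for the task at hand; they are not ACE settings.
    """
    def score(s):
        return w_success * s["success"] - w_stability * s["std_dev"] - w_time * s["seconds"]

    return sorted(summaries, key=lambda name: score(summaries[name]), reverse=True)
```

Changing the weights changes the winner: a configuration with a slightly higher mean but a large spread can rank first when stability is ignored and last when it is penalized.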

ACE may also be used directly as a classifier. Once users have selected the classifier(s) that they wish to use, whether through meta-learning or based on prior knowledge, they need only provide ACE with feature vectors and model classifications. ACE then trains the selected classifier(s) and presents users with the trained models.
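Using a single, already-chosen classifier follows a train-once, reuse-later pattern. The sketch below assumes a hypothetical `NearestCentroidModel` class and uses Python's standard `pickle` module to save and reload the trained model. It illustrates the workflow only and does not reflect how ACE or Weka store trained models.

```python
import pickle
from dataclasses import dataclass, field


@dataclass
class NearestCentroidModel:
    """Hypothetical stand-in for a trained classifier; not an ACE or Weka class."""
    centroids: dict = field(default_factory=dict)

    def train(self, vectors, labels):
        # Group feature vectors by their model classification.
        grouped = {}
        for vec, label in zip(vectors, labels):
            grouped.setdefault(label, []).append(vec)
        # Store the per-class mean vector (column-wise average).
        self.centroids = {
            label: [sum(col) / len(rows) for col in zip(*rows)]
            for label, rows in grouped.items()
        }
        return self

    def classify(self, vec):
        # Pick the class whose centroid is closest in squared Euclidean distance.
        return min(
            self.centroids,
            key=lambda c: sum((a - b) ** 2 for a, b in zip(vec, self.centroids[c])),
        )


def train_and_save(vectors, labels, path):
    """Train on labelled feature vectors and write the trained model to `path`."""
    model = NearestCentroidModel().train(vectors, labels)
    with open(path, "wb") as f:
        pickle.dump(model, f)
    return model


def load_model(path):
    """Reload a previously saved model (only unpickle files you trust)."""
    with open(path, "rb") as f:
        return pickle.load(f)
```

`pickle` is used here only because it ships with Python; it should never be used to load files from untrusted sources.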

ACE is specifically designed to facilitate classification for those new to pattern recognition, both through its use of meta-learning to help inexperienced users avoid inappropriate algorithm selections and through its intuitive GUI (currently only partially complete). ACE is also designed to facilitate the research of those well-versed in machine learning, and includes a command-line interface and well-documented API for those interested in more advanced batch use or in development.

ACE is built on the standardized Weka machine learning infrastructure, and makes direct use of a variety of algorithms distributed with Weka. This means that new algorithms produced by the very active Weka community can be incorporated into ACE immediately, and that new algorithms designed specifically for music information retrieval (MIR) research can be developed within the Weka framework. ACE can read features stored in either ACE XML or Weka ARFF files.
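For readers unfamiliar with ARFF, the format is a plain-text header of `@relation` and `@attribute` declarations followed by comma-separated rows after `@data`. The reader below is a minimal sketch that handles only dense rows with numeric or nominal attributes. It is not Weka's loader (Weka's own Java implementation is `weka.core.converters.ArffLoader`), `parse_arff` is a hypothetical helper name, and ACE XML files are not covered.

```python
def parse_arff(text):
    """Parse a small subset of ARFF: dense rows, numeric or nominal attributes.

    Not handled: quoted names or values, sparse rows, string/date attributes.
    Missing values ('?') become None. Returns (relation, attributes, rows).
    """
    relation, attributes, rows = None, [], []
    in_data = False
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("%"):  # skip blank lines and comments
            continue
        lower = line.lower()
        if not in_data:
            if lower.startswith("@relation"):
                relation = line.split(None, 1)[1]
            elif lower.startswith("@attribute"):
                _, name, spec = line.split(None, 2)
                spec = spec.strip()
                if spec.startswith("{"):
                    # Nominal attribute: keep the list of allowed values.
                    values = [v.strip() for v in spec.strip("{}").split(",")]
                    attributes.append((name, values))
                elif spec.lower() in ("numeric", "real", "integer"):
                    attributes.append((name, "numeric"))
                else:
                    raise ValueError(f"unsupported attribute type: {spec}")
            elif lower.startswith("@data"):
                in_data = True
            continue
        fields = [f.strip() for f in line.split(",")]
        if len(fields) != len(attributes):
            raise ValueError(f"expected {len(attributes)} values, got {len(fields)}")
        row = []
        for (name, kind), value in zip(attributes, fields):
            if value == "?":
                row.append(None)
            elif kind == "numeric":
                row.append(float(value))
            elif value in kind:
                row.append(value)
            else:
                raise ValueError(f"{value!r} is not a legal value for {name}")
        rows.append(row)
    return relation, attributes, rows
```

Keywords are matched case-insensitively, as ARFF allows, so `@ATTRIBUTE x REAL` is read the same way as `@attribute x real`.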

Changes in ACE 2

ACE 2.0 is the current release version of ACE, and includes several important updates relative to the original ACE 1.x:

- Improved API: The overall architectural structure of ACE 2.0 has been entirely redesigned in order to simplify and facilitate the use of ACE as a library by external software.

- Improved Command Line Interface: The command line interface has been improved in order to make its use more intuitive and in order to provide users with additional ways of customizing processing.

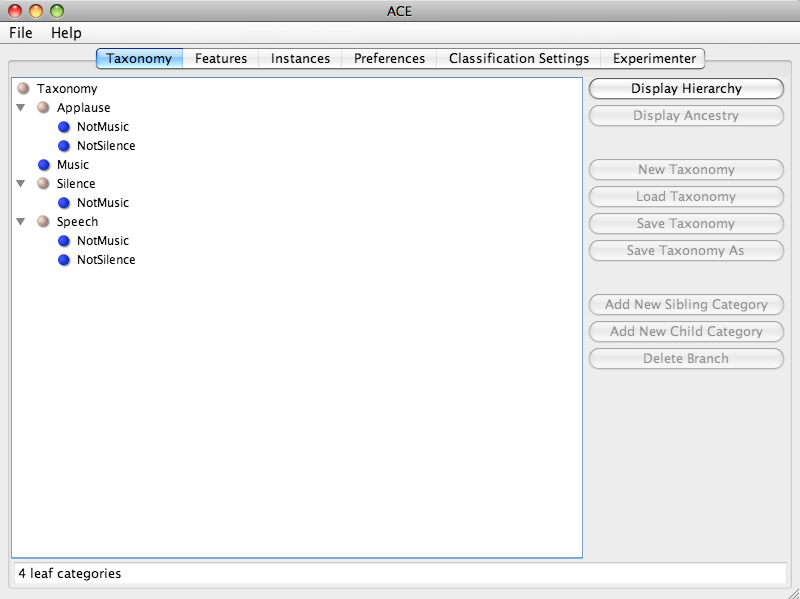

- Updated GUI: A GUI is now available for viewing and editing ACE XML files. Meta-learning functionality is still restricted to the command line interface and API at this point, however, although there are plans to expand the GUI functionality.

- Improved Cross-Validation: ACE now performs its own customized cross-validation in order to provide new options and more statistics than were available when Weka's cross-validation implementation that was previously used.

- ACE XML ZIP and Project Files: The ACE XML Project and ZIP files allows users to associate multiple ACE XML files together so that they may be automatically saved or loaded together, and the ZIP format makes it possible to package all files referred to in a Project file into a single compressed ZIP file.

Screen Shot (GUI still under development)

Manual

Additional functionality beyond what is described here is available in ACE 2's manual.

Related Publications and Presentations

McKay, C. 2010. Automatic music classification with jMIR. Ph.D. Thesis. McGill University, Canada.

McKay, C., J. A. Burgoyne, J. Hockman, J. B. L. Smith, G. Vigliensoni, and I. Fujinaga. 2010. Evaluating the genre classification performance of lyrical features relative to audio, symbolic and cultural features. Proceedings of the International Society for Music Information Retrieval Conference. 213–8.

McKay, C., and I. Fujinaga. 2010. Improving automatic music classification performance by extracting features from different types of data. Proceedings of the ACM SIGMM International Conference on Multimedia Information Retrieval. 257–66.

McKay, C., J. A. Burgoyne, and I. Fujinaga. 2009. jMIR and ACE XML: Tools for performing and sharing research in automatic music classification. Presented at the ACM/IEEE Joint Conference on Digital Libraries Workshop on Integrating Digital Library Content with Computational Tools and Services.

Thompson, J., C. McKay, J. A. Burgoyne, and I. Fujinaga. 2009. Additions and improvements to the ACE 2.0 music classifier. Proceedings of the International Society for Music Information Retrieval Conference. 435–40.

McKay, C., and I. Fujinaga. 2008. Combining features extracted from audio, symbolic and cultural sources. Proceedings of the International Conference on Music Information Retrieval. 597–602.

McKay, C., and I. Fujinaga. 2007. Style-independent computer-assisted exploratory analysis of large music collections. Journal of Interdisciplinary Music Studies 1 (1): 63–85.

McKay, C., R. Fiebrink, D. McEnnis, B. Li, and I. Fujinaga. 2005. ACE: A framework for optimizing music classification. Proceedings of the International Conference on Music Information Retrieval. 42–9.

McKay, C., D. McEnnis, R. Fiebrink, and I. Fujinaga. 2005. ACE: A general-purpose classification ensemble optimization framework. Proceedings of the International Computer Music Conference. 161–4.

Sinyor, E., C. McKay, R. Fiebrink, D. McEnnis, and I. Fujinaga. 2005. Beatbox classification using ACE. Proceedings of the International Conference on Music Information Retrieval. 672–5.

Questions and Comments